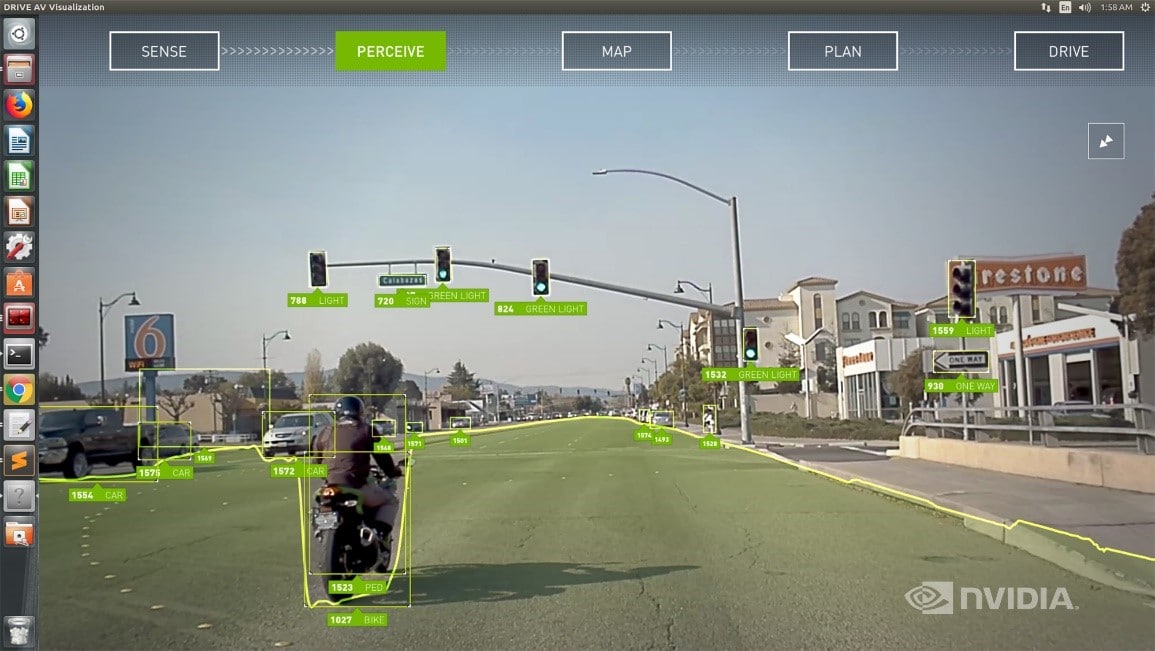

Nikkei xTECH, 2023.9.6

NVIDIA Expands Software to Spread Generative AI

U.S.-based NVIDIA is expanding software that makes generative AI (artificial intelligence) easy to use, in fields such as autonomous driving, while also expanding GPU sales. It is also strengthening its support for the industrial metaverse, which relies on realistic 3D computer graphics (3DCG). NVIDIA's momentum is expected to continue, driven by generative AI and by GPU products for the industrial metaverse.

The international conference ‘SIGGRAPH 2023’ was held in August 2023. SIGGRAPH is a leading conference on graphics and interaction technology, but it has recently seen a steady stream of announcements about AI technology and its applications. This is because AI is becoming important in both graphics and interaction. In graphics, AI is used to process images easily or to generate new images automatically. In interaction, it is used to recognize hand gestures and facial movements. Advances in graphics technology are also promoting the use of the industrial metaverse, for example in digital twins. Against this backdrop, the keynote by Jensen Huang, founder and CEO of NVIDIA, covered a wide range of topics, including GPU products and software dedicated to generative AI and new functions for the industrial metaverse. Five notable announcements are introduced below.

① Easy customization of generative AI. ‘AI Workbench’ is a toolkit NVIDIA developed to speed up the development of generative AI for enterprises. With it, developers can test or customize pretrained large language models (LLMs) for generative AI. It is designed to work with AI models hosted on the software development sharing site ‘GitHub’, as well as on ‘NVIDIA NGC’ and ‘Hugging Face’, which offer a wide range of AI-related software.
Hugging Face is a service for sharing trained AI models and datasets, playing a role in generative AI similar to that of GitHub. The key feature of AI Workbench is that generative AI models can be customized easily on a local PC or workstation. An easy-to-understand graphical user interface (GUI) improves work efficiency, and NVIDIA says developers can customize generative AI with just a few clicks. To make it easier to deploy customized or newly created AI models at scale in production environments, NVIDIA also updated its enterprise software platform ‘AI Enterprise’ from version 3.0 to 4.0. The new version supports ‘NeMo’, a framework for customizing LLMs for various purposes, along with functions that maximize server processing performance and utilization across the entire cloud environment, including multi-cloud, hybrid cloud, and data centers. NeMo was already offered by NVIDIA, but it is now available in AI Enterprise with enterprise-grade quality.

② Collaboration on generative AI. NVIDIA's collaborations with content companies on generative AI are continuing, and their results were presented. For example, in a collaboration with the U.S. content provider Shutterstock, NVIDIA's ‘Picasso’, an AI that generates images, videos, and 3D content, was used to generate 360-degree environment maps at 8K resolution. The model was reportedly trained on content licensed from Shutterstock. Autodesk of the U.S. announced that it will integrate content-creation functions built with Picasso's AI models into its 3DCG software Maya. NVIDIA also announced a partnership with Hugging Face, the U.S. company that operates the Hugging Face platform. Hugging Face users will be able to build their own AI models using NVIDIA's DGX Cloud.

③ Generative AI in automobiles. In the automotive field, NVIDIA also presented cases in which the use of generative AI is spreading.
For example, NVIDIA showed that its autonomous-driving development simulator ‘DRIVE Sim’ uses ‘NeRF (Neural Radiance Fields)’, a technique that generates 3D scenes from multiple 2D images, so that sensor data acquired from real vehicles can be reflected in the simulated environment for autonomous driving tests. The Toyota group also explained that it has begun to use generative AI. For example, Toyota Research Institute (TRI), Toyota's U.S. R&D subsidiary, is researching the use of generative AI in automobile design and the application of diffusion models to engineering design.

④ Omniverse updates to promote USD. NVIDIA has also updated ‘Omniverse’, its platform for the industrial metaverse, to promote the use of the 3D scene description language ‘Universal Scene Description (USD)’. The key change is strengthened USD-related functionality. In 3DCG production for fields such as architecture and games, USD has been adopted as a standard format so that multiple users can work together while using different production tools. However, because USD is open source, implementations from different companies have differed subtly in their specifications even while carrying the USD name, which has reportedly made interoperability difficult. The industry is therefore working through an industry organization to improve interoperability and enhance functionality under the name ‘Open Universal Scene Description (OpenUSD)’. In addition to supporting OpenUSD, Omniverse introduced new features such as ‘ChatUSD’, which answers USD-related questions in chat and generates sample code, and ‘RunUSD’, which uploads created USD content to the cloud and renders it, or reports whether the content complies with OpenUSD.

⑤ A new chip with high-speed memory for generative AI. NVIDIA also announced a new semiconductor product for generative AI: a version of the GH200 Grace Hopper Superchip that adopts high-speed memory. Major manufacturers will start offering systems based on this product in the second quarter of 2024.
Grace Hopper Superchip is expected to offer Arm